So much of the modern age is wrapped up in technology.

The rapid changes technology has brought to modern life might seem instantaneous, but they're down to long hours of work and to people who can see what could be instead of what is.

Here's our pick of the ten most pioneering people in modern technology.

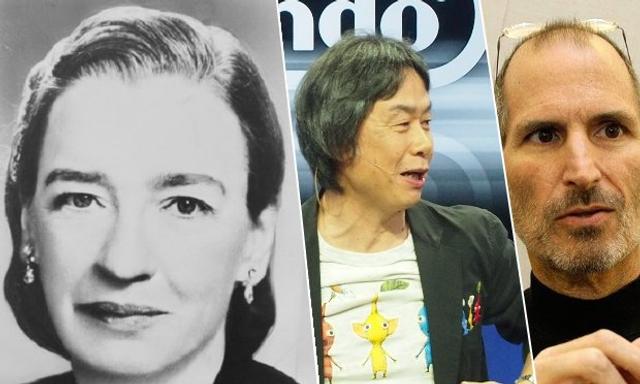

10. SHIGERU MIYAMOTO

If you've played on a Nintendo, or indeed almost any modern games console, you have Shigeru Miyamoto to thank for much of what you enjoyed. As a games designer for Nintendo, Miyamoto saw that players were becoming less focused on chasing high scores, as they had in arcades, and more focused on storytelling and progression. It wasn't about racking up as many points as possible, but about reaching the end. Miyamoto's design ethos is also unusual. Nintendo rarely, if ever, uses focus groups, relying instead on a simple principle: if it's fun to play, then it's fun for everyone. The list of games Miyamoto has created or produced is nothing short of incredible, including the very first Super Mario Bros., the original The Legend of Zelda, F-Zero, The Legend of Zelda: Ocarina of Time and Star Fox, and he hasn't come close to slowing down yet. Just this past week, Miyamoto announced that Super Mario is finally coming to smartphones with Super Mario Run.

9. GRACE MURRAY HOPPER

If you've ever heard the term "debugging", it's largely down to Rear Admiral Grace Hopper of the US Navy. She wrote programs for Harvard's Mark I computer, and she helped popularise the term after her team found a moth trapped in a relay of its successor, the Mark II. Hopper was also a driving force behind COBOL, one of the earliest high-level programming languages and one that is still in use to this day. High-level languages let programmers write instructions in something closer to English rather than raw machine code, the same idea behind languages like Python, Delphi, Perl and Visual Basic. On top of all this, Hopper is only the second woman from the US Navy's own ranks to have a warship named after her: the destroyer USS Hopper.

8. MARK ZUCKERBERG

Love him or hate him, Mark Zuckerberg has irrevocably changed the way people interact, thanks to Facebook. Facebook grew out of Facemash, a site Zuckerberg built for Harvard students to rate pictures of one another, and went on to become the social media platform of its time. In the space of just twelve years, it grew from a college experiment into the single largest social networking site on the planet, with more than 1,500,000,000 monthly active users. That's over one and a half billion, by the way. As for Zuckerberg, he's just turned 32 and still has a long way to go.

7. TIM BERNERS-LEE

If you're reading this on a laptop, phone, desktop, tablet or anything else, you have Tim Berners-Lee to thank for the World Wide Web that delivered it. In March 1989, while working at CERN, Berners-Lee proposed a system of linked documents, and by the end of 1990 he had written the first versions of HTML, HTTP and a web browser. Simply put, a browser could connect to a web server and request a file stored on it, with the request carried over TCP, or Transmission Control Protocol. TCP was designed earlier by two of the founders of the Internet, Vint Cerf and Bob Kahn. The Web has grown a long way since: later versions of HTTP added persistent connections (the first HTTP closed the connection after a single request and response), and encrypted connections arrived with HTTPS. But it all grew from Berners-Lee and his work.
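
For the curious, here's a minimal sketch of that original request-and-response cycle, modelled on the one-line request format of the earliest HTTP (later documented as HTTP/0.9): the browser opens a TCP connection, sends `GET /path`, and the server replies with the document and hangs up. The server and client both run on your own machine, and the page contents and the `/hello.html` path are made up for illustration. It's a teaching toy, not how a modern browser or web server works.

```python
import socket
import threading

# Hypothetical page served by the toy server; not a real CERN document.
DOCUMENT = b"<title>Hello</title>\r\n<p>A minimal hypertext document.</p>\r\n"


def serve_once(server: socket.socket) -> None:
    """Accept one connection, answer a one-line GET request, then hang up."""
    conn, _ = server.accept()
    with conn:
        request = b""
        while not request.endswith(b"\r\n"):
            chunk = conn.recv(1024)
            if not chunk:
                break
            request += chunk
        # Early HTTP had a single method: "GET /path", no headers, no status line.
        if request.startswith(b"GET "):
            conn.sendall(DOCUMENT)
    # Leaving the with-block closes the socket: one request, one response.


def fetch(host: str, port: int, path: str) -> bytes:
    """Open a TCP connection, send a one-line GET, read until the server closes."""
    with socket.create_connection((host, port)) as sock:
        sock.sendall(b"GET " + path.encode("ascii") + b"\r\n")
        chunks = []
        while True:
            chunk = sock.recv(1024)
            if not chunk:  # the server closed the connection: document complete
                break
            chunks.append(chunk)
    return b"".join(chunks)


def demo(path: str = "/hello.html") -> bytes:
    """Run the toy server and client on localhost; return what the client got."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
        server.listen(1)
        port = server.getsockname()[1]
        worker = threading.Thread(target=serve_once, args=(server,))
        worker.start()
        body = fetch("127.0.0.1", port, path)
        worker.join()
    return body


if __name__ == "__main__":
    print(demo().decode("ascii"))
```

Note how the end of the document is signalled simply by the server closing the connection. That's exactly why every new page needed a fresh connection until persistent connections came along.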

6. LARRY PAGE

Did you get here from Google, perhaps? You can thank Larry Page for that one. Page and Sergey Brin founded Google in 1998 around PageRank, an algorithm (named after Page) that, in layman's terms, works out which pages on the web are the most important. Say, for example, this article talks about Larry Page. An article on a different website also covers Larry Page and links to this article. Yet another article mentions Larry Page and links here too. Page and Brin's algorithm could see that all those articles link to this one particular article and treat that as a sign it's the most important page on the subject, with links from pages that are themselves important counting for more. Therefore, when you search for Larry Page, this article appears at the top of the listings because of all the other articles linking to it. That's the idea Google was built on, and it's what Larry Page and Sergey Brin invented.
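
The link-counting idea above fits in a few lines of code. This is a minimal sketch of the simplified, textbook form of PageRank, computed by repeatedly passing each page's score along its links ("power iteration"). It assumes a damping factor of 0.85, the value suggested in Page and Brin's original paper, and the page names are hypothetical. Google's real ranking has long combined PageRank with many other signals, so treat this as an illustration of the principle only.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 100) -> dict[str, float]:
    """Simplified PageRank by power iteration.

    links maps each page to the pages it links to. Returns a score per page;
    the scores sum to 1.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with every page equally important
    for _ in range(iterations):
        # With probability (1 - damping) a reader jumps to a random page.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its importance on, split evenly across its links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its importance everywhere.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical pages: three other sites link to this article.
links = {
    "blog-a": ["this-article"],
    "blog-b": ["this-article"],
    "forum-c": ["this-article", "blog-a"],
    "this-article": ["blog-a"],
}
scores = pagerank(links)
print(max(scores, key=scores.get))  # this-article
```

Because each page hands its own score on to the pages it links to, a link from a highly ranked page is worth more than a link from an obscure one. That's the difference between PageRank and simply counting links.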

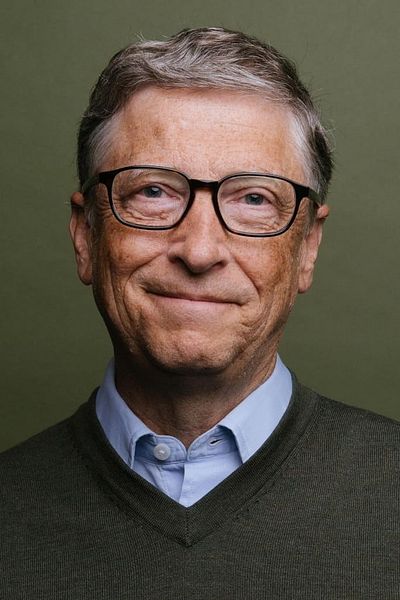

5. BILL GATES

For a man who didn't finish college and was almost thrown out of school, Bill Gates didn't do too badly. He is currently the wealthiest person in the world, with a net worth of $90 billion, was the largest shareholder of Microsoft until a few years ago and was one of the people most responsible for the popularity of personal computers. Before the personal computer took off, computers were mostly large, expensive machines, some the size of small rooms, used largely by businesses, scientists and libraries. The idea that you could use a computer for anything outside of this was more than a little nutty. Microsoft's software helped change that: MS-DOS, then Windows 3.1 and its successor, Windows 95, brought easy-to-use computing into millions of homes. The rise of the Internet, smartphones, PC gaming, all of it built on the popularisation of home computers, something Bill Gates did as much as anyone to bring about.

4. SID MEIER

If Shigeru Miyamoto defined home console gaming, Sid Meier pushed computer games further than anyone thought possible. Sid Meier's Civilization was one of the most groundbreaking games ever made and helped shape the way modern games are made. Civilization showed that games could be less about clearing levels or chasing high scores and, instead, be more nuanced and strategic. It's a testament to Sid Meier's creation that one player kept a single game of Civilization II, the sequel to Civilization, going for close to ten years with no winner in sight. Google 'The Eternal War' and you'll know what we're talking about.

3. SOPHIE WILSON

As you probably know, your smartphone runs on a processor, a chip that works through a long series of very simple operations. Which operations it can perform is set by its instruction set. An instruction set is basically the language of a computer chip: it lists every basic command the chip understands, such as loading a number from memory, adding two numbers together, comparing them or jumping to another part of a program, and every app you run is ultimately translated into these commands. Most modern smartphones use a variation on the ARM processor, whose original instruction set was designed by Sophie Wilson. That's not all. Wilson also created the BBC BASIC programming language in 1981, which was used on early computers like the BBC Micro. To this day, ARM is the most widely used 32-bit architecture, and it all started with Sophie Wilson's design.
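
To make "the language of a chip" a little more concrete, here's a minimal sketch that decodes one family of classic 32-bit ARM (A32) instructions, the data-processing group (ADD, SUB, MOV, CMP and friends), by slicing each 32-bit instruction word into its bit fields: a condition, an opcode, a flag bit, registers and an operand. It's deliberately partial and is not a real disassembler. It handles only plain register and rotated-immediate operands and rejects shifted registers and every other instruction class.

```python
# Condition-code suffixes for bits 31-28; AL ("always", 0b1110) has no suffix.
CONDITIONS = ["EQ", "NE", "CS", "CC", "MI", "PL", "VS", "VC",
              "HI", "LS", "GE", "LT", "GT", "LE", ""]
# Data-processing operations selected by bits 24-21.
OPCODES = ["AND", "EOR", "SUB", "RSB", "ADD", "ADC", "SBC", "RSC",
           "TST", "TEQ", "CMP", "CMN", "ORR", "MOV", "BIC", "MVN"]
COMPARES = {"TST", "TEQ", "CMP", "CMN"}  # only set flags, no destination register
MOVES = {"MOV", "MVN"}                   # one source operand, no Rn


def decode(word: int) -> str:
    """Decode a basic A32 data-processing instruction into assembly text.

    A partial sketch: shifted-register operands and all other instruction
    classes (loads, stores, branches, multiplies...) are rejected.
    """
    cond = (word >> 28) & 0xF
    if cond == 0xF or ((word >> 26) & 0b11) != 0:
        raise ValueError("not a data-processing instruction")
    is_immediate = (word >> 25) & 1  # I bit: is operand 2 an immediate value?
    name = OPCODES[(word >> 21) & 0xF]
    set_flags = (word >> 20) & 1     # S bit: update the condition flags?
    rn = (word >> 16) & 0xF          # first source register
    rd = (word >> 12) & 0xF          # destination register

    if is_immediate:
        # An 8-bit value rotated right by twice the 4-bit rotate field.
        rotate = ((word >> 8) & 0xF) * 2
        imm8 = word & 0xFF
        value = ((imm8 >> rotate) | (imm8 << (32 - rotate))) & 0xFFFFFFFF
        operand2 = f"#{value}"
    else:
        if (word >> 4) & 0xFF:
            raise NotImplementedError("shifted register operands are not handled")
        operand2 = f"r{word & 0xF}"

    if name in COMPARES:
        if not set_flags:
            raise ValueError("compare encodings without the S bit mean other instructions")
        return f"{name}{CONDITIONS[cond]} r{rn}, {operand2}"
    mnemonic = name + ("S" if set_flags else "") + CONDITIONS[cond]
    if name in MOVES:
        return f"{mnemonic} r{rd}, {operand2}"
    return f"{mnemonic} r{rd}, r{rn}, {operand2}"


print(decode(0xE0810002))  # ADD r0, r1, r2
print(decode(0xE3A00001))  # MOV r0, #1
```

Every field sits at a fixed position in the word, which is part of what makes this style of instruction set quick and cheap for a chip to decode.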

2. STEVE JOBS

Although many would rightly argue that it was Steve Wozniak who built Apple's first computers, it was Steve Jobs who turned the company into the most recognisable brand in the world. That's no exaggeration, either. Apple is among the most valuable companies on the planet, and the iPhone is one of the best-selling products in history. Although Jobs was better known as a salesman and businessman than as an engineer, it was his marketing tactics and eye for design that made the iMac, the iPod, the iPhone and the iPad as popular as they were. It wasn't just home computing, either. In 1986, Jobs personally funded the Graphics Group when it spun out of Lucasfilm's computer graphics division. You'll know it by a more recognisable name: Pixar.

1. ALAN TURING

Without Alan Turing, you wouldn't be reading this. It's really that simple. Turing's 1936 paper describing a "universal machine", one that could carry out any computation given the right instructions, laid the theoretical foundation of modern computing. During World War II he was central to breaking the German Enigma cipher, and after the war he designed the ACE, one of the first designs for a stored-program computer. Keeping a program in memory alongside its data is how computers and phones run programs to this day, and it was Turing's theoretical work that provided the groundwork for it. Turing also developed early ideas about artificial intelligence, arguing that machines could think, taking in information and processing it much as a brain does. To this day, the Turing test remains the best-known benchmark for judging whether a machine can think. Simply put, Alan Turing was the architect of the computer age.
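
The core idea of a stored-program computer is that instructions are just numbers kept in the same memory as the data they work on, so changing what the machine does means writing new numbers into memory rather than rewiring it. The toy machine below is a minimal sketch of that fetch-decode-execute loop. Its four opcodes and their numbering are invented for illustration and don't correspond to the ACE or any real computer.

```python
# Hypothetical opcodes for a toy accumulator machine; they are not taken from
# the ACE or any real computer.
HALT, LOAD, ADD, STORE = 0, 1, 2, 3


def run(memory: list[int]) -> list[int]:
    """Fetch-decode-execute loop over one memory that holds both code and data."""
    acc = 0  # accumulator: the machine's single working register
    pc = 0   # program counter: address of the next instruction
    while True:
        # Fetch: instructions are read from the same memory as the data.
        opcode, operand = memory[pc], memory[pc + 1]
        pc += 2
        # Decode and execute.
        if opcode == LOAD:
            acc = memory[operand]
        elif opcode == ADD:
            acc += memory[operand]
        elif opcode == STORE:
            memory[operand] = acc
        elif opcode == HALT:
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 2}")


# Addresses 0-7 hold the program; addresses 8-10 hold its data.
memory = [
    LOAD, 8,     # acc = memory[8]
    ADD, 9,      # acc = acc + memory[9]
    STORE, 10,   # memory[10] = acc
    HALT, 0,
    20, 22, 0,   # two inputs and a slot for the result
]
print(run(memory)[10])  # 42
```

To give the machine a different job, you'd simply load a different list of numbers. The hardware, here the `run` loop, stays exactly the same.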